As I begin writing this article, in the last days of 2022, there is a non-zero chance that you, I, and all of your pets are living in a world plagued by The Simulation Hypothesis: the idea that we are non-player characters (or NPCs) living wholly, and exclusively, on a server in the future. This is a difficult concept to understand, at first, but that doesn’t prevent people from talking about it. Elon Musk talks about it occasionally on Twitter (which is, itself, a powerful but doomed simulation where people pretend to share ideas). The characters on Atlanta worried about it recently. We already had shows about simulations, including Westworld and at least half of Black Mirror. But now even television characters who are primarily suffering from realness, not its opposite, are starting to feel like reanimated mannequins.

The Simulation Hypothesis went viral for all kinds of reasons, some of which I’ll attempt to diagnose later. I can almost respect its author, Oxford University’s Nick Bostrom, for creating a powerful cultural touchstone. But this particular touchstone is also, not incidentally, a philosophical theorem. It’s capable of being wrong, and it is wrong, for reasons that matter greatly. Disproving it requires traveling to the edges of the universe, reflecting on the origins of life on Earth, and slandering both gods and aliens. Ridding ourselves of it, once and for all, might reopen paths forward for those marginal, essential beings who call themselves philosophers.

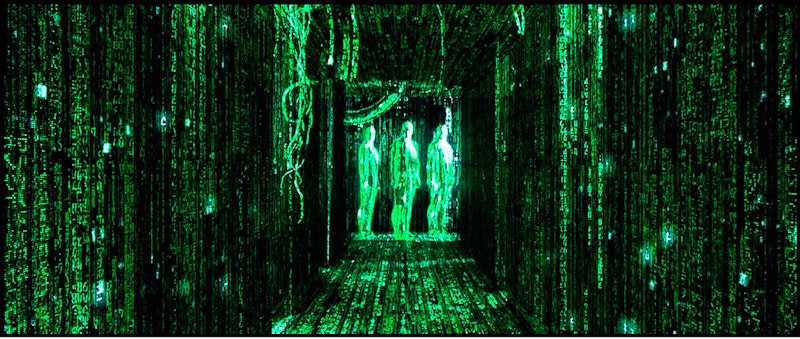

About a quarter of the way through his magnum opus, Bostrom lays his cards on the table. “Posthuman civilizations would have enough computing power to run hugely many ancestor-simulations even while using only a tiny fraction of their resources for that purpose,” he writes, skipping normative grammar as he races toward a conclusion the Wachowskis had already debuted in The Matrix, with hugely many cool special effects, four years earlier. This is the basis for Bostrom’s entire thesis: that future civilizations will have unbelievably powerful computers, and that they will use these wonderful machines for “ancestor-simulations” the size of the entire perceptible universe. (An “ancestor-simulation” is Bostrom’s strange term for a computer simulation that happens to be set in our present day.)

All such simulations begin with a simple question: “Which ancestors do I want to simulate?” In The Matrix, there are a lot of reasons for the machines to persistently simulate the end of the 20th century. First, they need comatose human beings to generate electricity. (Nobody, not even the Wachowskis, knows why the computers didn’t use cows, or why they invented an elaborate simulation to prevent people in a coma from getting bored.) Equally important, the simulation can only be “set” at one point in historical time: approximately 100 years before the invention of true artificial intelligence. If the simulation itself reaches a point where the people inside it develop true artificial intelligence, their simulated machines will kill them (in real life and in the Matrix). If, on the other hand, the simulation takes place in the distant past, humans will die too fast for other, quainter reasons, such as “consumption,” scurvy, and fighting an entire war to determine who gets to marry Helen of Troy. Thus, from the standpoint of the laconic AIs in The Matrix, 1999 is optimal because it can bear the heaviest, most stable load of human lives.

For the (comparatively boring) post-humans in Bostrom’s essay, this problem leads somewhere different: to a huge proliferation of “ancestor-simulations,” with wacky chronologies, Easter eggs, and in-app purchases. The game designers will create these simulations for no reason at all—or, as Bostrom struggles to put it, simply because a “fraction of posthuman civilizations… are interested in running ancestor-simulations (or… contain at least some individuals who are interested in that and have sufficient resources to run a significant number of such simulations).” The average number of ancestor-simulations run by such an interested civilization is a number Bostrom calls N_I (an N with a subscript I, for “interested”). Bostrom can’t give us an exact number, since even he’s dimly aware that he’s merely chasing his own fantasy here. He nonetheless claims that N_I will be “extremely large,” because “of the immense computing power of posthuman civilizations.” They can do it, so they will do it, just for the fun of putting endless simulated humans through endless simulations of Coke Zero, Covid-19, and The Global War on Terror.
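For readers who want to see the arithmetic that this number feeds into, here is a sketch of the fraction at the heart of Bostrom’s 2003 paper. The symbols follow his notation, but the derivation below is a reconstruction rather than a quotation: $f_P$ is the fraction of human-level civilizations that survive to a posthuman stage, $\bar{N}$ is the average number of ancestor-simulations run by a posthuman civilization, $\bar{H}$ is the average number of people who live in a civilization before it becomes posthuman, $f_I$ is the fraction of posthuman civilizations interested in running such simulations, and $\bar{N}_I$ is the average number run by those interested civilizations (the number discussed above).

$$
f_{\text{sim}} = \frac{f_P\,\bar{N}\,\bar{H}}{f_P\,\bar{N}\,\bar{H} + \bar{H}} = \frac{f_P\,\bar{N}}{f_P\,\bar{N} + 1},
\qquad
\bar{N} \ge f_I\,\bar{N}_I
\;\Longrightarrow\;
f_{\text{sim}} \ge \frac{f_P\,f_I\,\bar{N}_I}{f_P\,f_I\,\bar{N}_I + 1}
$$

The inequality holds because $x/(x+1)$ grows with $x$. With purely illustrative numbers, if only one civilization in a hundred both reaches the posthuman stage and takes an interest ($f_P f_I = 0.01$), but each interested one runs a million simulations ($\bar{N}_I = 10^6$), the product is $10^4$ and $f_{\text{sim}}$ is at least $10^4/(10^4 + 1)$, or about 0.9999. Everything hinges on how large $\bar{N}_I$ is assumed to be.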

Let’s examine his breezy postulate a little more closely. It divides neatly into three different, equally serious problems.

THE PROBLEM OF CONVERGENCE.

Bostrom’s absurd variable, N_I, doesn’t stand for the number of sentient NPCs who inhabit a given simulation. It stands for the number of distinct simulations that run their course at some point in the post-human future. Bostrom’s still imagining simulations as if they’re snow globes—inert spectacles that don’t interact with each other or the larger world around them. That’s why he tries to solve, mathematically, the question “which kind of reality am I living in?” He fails to realize that being conscious forces you, no matter how small you are, into the same reality the rest of us inhabit.

That raises an important question: are simulated beings conscious or not? Are these futuristic simulations just representations of past events, in which everything happens exactly the way it “really” happened before, or are the beings inside them sufficiently intelligent to make choices on their own?

These may seem like different questions; they’re not. Here’s the baffling truth: any being that experiences itself as “conscious” is not capable of building a deterministic simulation peopled by fully conscious “NPCs” (“non-player characters,” also known as “bots”), because in order to build a working model of a conscious NPC that would function in a predetermined way, the game designer would have to be able to describe her own consciousness in deterministic terms, at which point it’d no longer be her consciousness. It would be a mere model, over and against which her freedom would reassert itself.

That doesn’t mean that the history of the world, or even the history of the universe, is too vast to be adequately represented for our viewing pleasure. One day, we’ll probably be able to build a simulation of the Buddha. He’ll live where the Buddha really lived, say all the things he supposedly said, and disappear from the world (to enter nirvana) at a precise, carefully orchestrated moment. But this will be, in essence, a movie, and our “Buddha” will be a special effect—no more alive, or conscious, than the robots that play music at Chuck E. Cheese restaurants.

It's also possible for computers to reach a point where they’re capable of performing as many “operations” as a human brain, and it may even be possible to set up those operations so they coalesce into a self-aware artificial intelligence. The only thing we won’t be able to do, at that point, is predict exactly how our AI will behave.

Bostrom doesn’t clarify whether or not he thinks events in our universe follow a pre-determined course; he doesn’t even give us an adequate description of consciousness. Nonetheless, if we are living inside a simulation, four things must be true:

—Our minds must be analogous to the minds of our creators. This is true for two reasons:

- Because it’d be the only reliable model of “organic” consciousness they’d have available to them, and therefore their only blueprint for artificial sentience.

- Because we can imagine hyper-realistic simulations ourselves. We do so by asserting several of our basic prerogatives as conscious beings: we question the “reality” of our surroundings, we cast into doubt the reality of other minds, and we abstract ourselves from our surroundings. In other words, we know our creators are like us because, if they’re “simulating” us, they’re doing something we already do (on a less magnificent scale, and at a lower resolution) all the time.

—We must be capable of unpredictable thoughts and actions, from our creators’ standpoint, for all the reasons outlined above.

—If we’re capable of independent thought and action, and we’re like our creators, then they must also be free beings, capable of making choices and having preferences. This produces an ironic result: if a simulation contains possibilities that exceed everything its creators can imagine, or already know, then it has the potential to alter them—that is, to persuade them to act or think differently.

—Given the fundamental identity between the beings inside the simulation, and the post-humans watching (or playing) it, the boundaries of the simulation will be porous. Even in a simulation that isn’t designed to prove, test, or reveal anything, it’s still possible for behaviors, ideas, and poems arising within the simulation to be understood by someone observing it. Information would thus pass from one world to the other—at which point the “people” in the microcosm of the simulation would begin to alter the destiny of the “larger,” equally susceptible world of their creators. There would no longer be any meaningful boundary between the simulation and the real world.

(The people in the simulation would be stuck inside it, on some microchip, but they wouldn’t be living “in a different universe,” any more than the tiny bacteria in your small intestine are living in a “different universe” from the squirrel on your roof.)

In short, any attempt by a sentient being to create new sentient people, even for the purposes of a mere “simulation,” will ultimately converge with the rest of reality if it succeeds, and will do so because its sentient NPCs make it non-deterministic. There aren’t a bunch of parallel, distinct universes you “could” be living in, most of which are “virtual.” There’s only one reality: the one with a destiny that is determined, and shared, by every sentient being of any size within it.

THE ETHICAL PROBLEM.

In one episode of the Netflix sci-fi show Black Mirror, a woman has a digital copy of her consciousness placed inside a smart home hub. For the rest of eternity, the version of her living in the hub will be her slave, forced to make her toast the way she likes it and to set her thermostat according to her preferences. As the show makes clear, this is an act with monstrous moral implications: if the person inside the hub is a full copy of her consciousness, and has agency and self-awareness, then forcing her homunculus to work 24 hours a day setting thermostats is just as inhuman as forcing any other real person to sacrifice themselves would be.

The advance of technology doesn’t guarantee an equal advance in morality. That’s a point to keep in mind when, on a purely hypothetical basis, we bow down to the gentle, super-advanced aliens we imagine waiting for us to “reach a stage where we will understand… what space and spaceships are,” to quote Haim Eshed, the unhinged former head of Israel’s military space program. There’s no reason to assume that technologically advanced creatures will be less bloodthirsty than we are right now; conditions on other planets might favor various kinds of undreamt scientific innovations without necessarily eliminating the various evils that consciousness brings in its train, including the desire for domination, and the hysterical fear of going extinct. But it’s reasonable to imagine that simulations featuring fully conscious beings would be subject to various laws and regulations, both because they are potentially dangerous and because God-like acts of aggression against simulated beings represent a real violence just as scary and unjustifiable as similar acts against embodied beings. If you set a simulation like this in motion, would you even be allowed to view it? Would you be allowed to turn it off?

The likely outcome of an ethically regulated world where it was possible to simulate consciousness is that nobody would be allowed to do it. This also sheds light on the “zoo hypothesis” that underwrites shows like Star Trek (in the form of the “prime directive”) and claims like the one Eshed is making about patient alien observers. If aliens who’ve already solved problems like producing clean energy and traveling across quantum space are watching us, why aren’t they helping us? It wouldn’t be a stretch to call that a strange, unethical position: “Let’s see if the humans can solve their trivial energy crisis before most of them die!” By denying us the crucial technological aid that would resolve some of our most serious global crises, the aliens are interacting with us, and they’re doing so in a problematic way. They’re turning us into a simulation, but one for which they’re every bit as morally responsible as we are. To see how significant this is, imagine there was a breakaway group of aliens that did want to extend a charitable hand to us human beings. Would they be hunted and killed by the defenders of the “prime directive”? The fate of our quaint simulated existence would then begin determining the fate of all those “advanced beings” perched on the dark edge of Saturn, consuming alien popcorn as they watch us try to figure out how to get the excess salt out of ocean water.

The argument so far suggests that any civilization that puts a similar premium on consciousness as we do—which, as we’ll see, is probably every civilization deserving of the name—would be obliged to intervene in a visible, attributable way in the affairs of Earth, because non-intervention is also an ethically significant decision for them to make. Since that hasn’t happened, as far as we can (reasonably) tell, the attempt to give life meaning really does rest with us, within the limits of our own ability to perceive and traverse spacetime. While it’s possible that there will be other births of consciousness on planets orbiting distant stars, or that our universe will contract until it produces a new, equally volatile and possibility-rich “Big Bang,” such theoretical second chances can only be of limited interest to us who are alive right now on Earth.

THE ONTOLOGICAL PROBLEM.

Let’s return briefly to Bostrom. Bostrom bases his probabilistic argument on the idea of a meaningful separation between “simulated worlds” (whose number is governed by N_I) and the “real” world, which is moving forward through time at some point in the future. As we’ve shown (by positing the problem of “convergence”), a simulation that contains fully conscious beings is likely to overlap with, and interfere with, the problem-solving and self-conception of the larger system and the “posthuman” beings living in it. The most fundamental reason for this is that information is scalable: it can be compressed into a computer simulation, or it can be extrapolated from a computer simulation into the real world. Therefore, computer simulations are part of the real world, to the extent that they model ideas or predictions which influence decision-making in a larger universe. So in that sense we’re all “involved” in the fate of the entire universe, regardless of whether we’re simulated or not; the stakes are the same.

Moreover, because of the permeability of simulations, from both “sides” of the terrarium walls, it’s highly likely that the capacity to simulate consciousness would be jealously guarded and subject to stringent ethical regulation. Therefore the problem of “counting” simulations has remarkably little to do with the theoretical computing power of future civilizations; simulations would probably be regarded as cyber-weapons—Trojan horses—every bit as dangerous and impactful as nuclear devices on our world today. In that case the only justifiable, open-source reason for creating a simulation would be to model solutions to a problem that the larger world doesn’t already know how to solve, eliminating the aesthetic justifications for a fascinating “ancestor-simulation,” and putting their meaning firmly within the present (that is, the “present” of this hypothetical future). Thus all simulations, regardless of the accidental or purposeful conditions that led them to arise, would tend to converge around the single goal of all civilizations, and the single most important unsolved problem of civilized life: the capacity to endure. And the strangest, most important deduction we can make from considering the possibility of simulation, and the likely reasons for a simulation to exist, is that the purpose of life (as a whole) is entangled with the fundamental nature of information, and the difference between information and matter.

In the second chapter of James Joyce’s novel Ulysses—an entire book about returning to the place where we began, and knowing it for the first time—the tedious schoolmaster Garrett Deasy tells Stephen Dedalus that “all human history moves towards one great goal, the manifestation of God.” Stephen rejects this, for reasons embedded in the anti-colonial struggle to wrest control of “history” away from its usual spokespeople. Deasy, anyway, hardly knows what he means; he’s just sententiously paraphrasing the German philosopher G. W. F. Hegel. But he and Hegel are nonetheless right. To understand why, we have to take a second look at the meaning of our genes.

When life began on Earth, a new kind of matter came into being. Living beings based on nucleic acids are more than an assemblage of molecules; they are an emergent phenomenon that instantaneously (and retroactively) changed the meaning and purpose of matter everywhere. DNA and RNA are more than just molecules that “seek” to recreate themselves; they are also kernels of information that describe and enact the construction of an entire system (most fundamentally, a single cell) capable of achieving homeostasis within a larger, unstable environment. Consciousness doesn’t emerge from bare life as an unpredictable, spookily untethered burst of freedom; it merely furthers the migration of matter towards a stable, homeostatic system by enabling greater transformations of matter than other animals or other life-forms are able to achieve. Both the compression of information into symbolic forms like language, and the attempts we make to predict and control our environment, are already presaged in the activity of any and all living things that survive and procreate by replicating (and, in some organisms, combining) their DNA.

We already have ways, using systems theory, of describing our own certainty and uncertainty about the world in ways aligned with this view of the “purpose” of DNA. Take, for example, the second law of thermodynamics, which states that the entropy of an isolated system never decreases: left to itself, every orderly system tends to become increasingly disordered over time. No modern scientist disputes this, but it’d be equally hard to find any modern scientist who disputes the temporary, local exception to this tendency created by living organisms. The neuroscientist Anil Seth, in his book Being You, writes that “organisms maintain themselves in… low-entropy states that ensure their continued existence.” He explains that “living systems are not closed, isolated systems. Living systems are in continual interaction with their environments, harvesting resources, nutrients, and information. It is by taking advantage of this openness that living systems are able to engage in the energy-thirsty activity of… warding off the second law.” In order for a living system to thrive, it has to simultaneously cultivate its own homeostatic closed system, and depend on sensory inputs (or, at the most basic level, external stimuli) in order to make sense of a beyond that’s both promising and threatening. From our fully conscious standpoint as human beings, this work goes beyond what we can directly experience through our limited senses, ultimately describing the entire project of prediction, futureproofing, and optimization we call applied science.

Entropy can take many forms: it can come in the form of a single meteor, large enough to extinguish life on Earth, or in the form of an expanding universe moving towards a stable condition where matter and energy are evenly distributed, at such a low threshold that all life would perish. Entropy is a hydra, and we often discover two new threats lying in wait for us at the end of every triumph. Nonetheless, it’s both possible and meaningful to categorize all of these threats as forms of entropy emerging from the very same unpredictable beyond that we hope will somehow sustain us as our species threatens to overrun our planet. Our existence is a refutation of—or, if you prefer, a protest against—the second law of thermodynamics. And it’s at this point that René Descartes, the French philosopher we have of late turned into a hobgoblin, becomes relevant once more.

Descartes, in his Meditations on First Philosophy, posits a version of the Simulation Hypothesis in which all of his sensory inputs could be under the control of a deceptive, manipulative demon. Abstracting himself from the world, on the grounds that it might be simulated, Descartes arrives at (what he considers to be) an impregnable proof of his own existence: the fact that he thinks. “I think, therefore I am” is extremely catchy, and about as concise as a proof ever gets, but it’s probably worth restating Descartes in more properly biological terms: I think, therefore my existence is of concern to me. Descartes, anticipating later work by philosophers like Martin Heidegger, basically argues that any being capable of worrying about its own existence inherently exists. More crucially, he argues that any being capable of being concerned about its own existence must have a conception of God.

Descartes writes, “The whole force of the argument lies in this: I recognize that it would be impossible for me to exist with the kind of nature I have—that is, having within me the idea of God—were it not the case that God really existed. By ‘God’ I mean the very being the idea of whom is within me, that is, the possessor of all the perfections which I cannot grasp, but can somehow reach in my thought, who is subject to no defects whatsoever.” Descartes reasons that the idea of God must have come from God, since he (Descartes) recognizes his own imperfection, even though his own natural tendency would be to have awarded “myself all the perfections of which I have any idea, and thus I should myself be God.” Furthermore, the idea can’t be one derived from external sensory inputs, since it doesn’t vary in intensity or nature, or trace back to a particular sensory impression; instead, “it is innate in me, just as the idea of myself is innate in me.” Since it’s innate in him, and compatible (but not identical) with his other qualities as a “thinking thing,” it must’ve come from an outside source—namely, from God, since the idea of perfection cannot originate with something imperfect.

The idea that God implants the idea of perfection within each of us is notoriously weak, and for several centuries now, Descartes’ readers have felt free to enjoy his thought experiments without taking this “trademark argument” for God’s existence all that seriously. But suppose we look at this “proof of God” in a different, more scientific light, accepting only the idea that Descartes has discovered something innate: the notion of a perfected version of ourselves. Where would this idea come from? Well, it would emerge as the ultimate goal of our struggle for survival: the comprehension and maintenance of a perfectly stable universe in which our ability to survive, and thrive, was guaranteed. Perfecting ourselves, and perfecting our environment, would amount to the same thing. The evolutionary advantages of such an innate orientation are obvious: the simple desire for safety would drive conscious organisms to explore their worlds, predict and neutralize threats, and intelligently maximize the impact of their actions.

Viewed in this light, perhaps the most interesting implication of the work Descartes began is that we have, for better or worse, an innately Manichean view of the universe. We have an innate idea of perfection that emerges from our own experience of “temporarily warding off” entropy by maintaining homeostasis. At the same time, since we can only do that by seizing resources that come from “beyond” us, we also need (and have) innate ideas about survival that imply the presence of various mortal dangers. Instead of reaching the truth about humanity deductively, by playing with the idea of a perfect external God, Descartes should’ve reasoned inductively, starting with the organism that thinks, and ending up at a universe contained entirely within human understanding. It’s not just basic to our nature to seek food, shelter, and water; it’s equally basic to our nature to try at every turn to enlarge our understanding of reality, warding off entropy at ever-increasing scales. The fact that we are immensely far from such perfection doesn’t besmirch that impulse.

Bostrom imagines a relatively stable posthuman future where our “present-day,” as we understand it, is a zoo exhibit for weary post-human voyeurs. In fact, if we’re worried about our survival, then even if we are in a computer simulation, it’s a simulation that has been created by beings who are equally worried about their own survival, and are hoping that their simulation will provide some answers: this is the only possible conclusion in light of the problems of convergence and ethics detailed above. That means that everything, real or simulated, is happening, so to speak, right now, in response to seemingly insuperable challenges to our existence; it also means that there is only one universe, regardless of how we’ve wired our part of it. Bostrom relies on an assumption he calls “substrate-independence”: namely, that a “simulated” consciousness running on a computer is not substantially different from a “real” consciousness inside a physical body. In truth, he ought to apply the same principle to the dividing line between one reality and another, no matter how they’re nested: it doesn’t ultimately make a difference which one you’re in. In both of them, we’re in danger. In both of them, we’re our only hope.

CONCLUSION: AD ASTRA, PER ASPERA.

The reason we feel like we’re in a simulation is that we are in one, like it or not. Our capacity to imagine what is true, including in compelling and verifiable ways, vastly outstrips our individual ability to experience such truths through our own senses. We live in a world where we don’t expect to be wiped out by a meteor, but we also live in a (once unimaginable) world where we can readily describe the exact kind of meteor that would kill every living thing on Earth. We worry about our own models, and in so doing, remind ourselves of what they are—models, only, but ones so vast and consequential that we spend our entire lives inside them. Our attempts to increase our control over our environment have not been universally good for our environment or ourselves; we worry more, not less, in the face of new discoveries. That’s part of being human. One thing is clear, however: when life began, some of the matter in the universe became information: data processed by a self-serving organism seeking to model the world in order to survive. We can’t, as a species, ever choose to stop converting the Earth, and everything around it, into this kind of information. It’s what we do, and the more successful we are at it, the more happiness we can reasonably expect to enjoy (despite all the anxiety we must carry in tow).

It may seem, having traveled this far, as if it’s necessary or important to pose various “endgame” scenarios: what happens when we convert the entire universe into a theme park for our little species? Won’t we get bored? Or: what’s the purpose of art in a universe that, “if I understand you correctly,” seems mostly to be defined by scientific bulwarks against big threats to our survival? Or: what does it mean to “manifest God” if all we’re really doing is inventing adequate vaccines to survive some future pandemic? What’s the difference between survival and fulfillment?

But it’s neither my intention, nor is it within my power, to imagine what life will be like as the horizons around us fluctuate or recede. All I’m trying to do is give the lie to the notion that we have, in the effort to travel further than our senses, accidentally made all of life into somebody else’s video game. Our current hypotheses about the universe may be wrong, but the urgency we experience within our lives isn’t. Our perfection, as well, is still ours to seek. When I was a kid, I used to wonder how a detective on television could break the fourth wall. “The names are made up,” he’d solemnly intone, “but the problems are real.” Now I have to agree with him. There’s no elsewhere where we can deposit our hopes, if the lights all go out over here. There’s no waking up from the nightmare of history if we can’t differently construe our dreams.

To understand why simulating consciousness would be so dangerous, just picture a simulation that creates a super-intelligent, super-manipulative being who begins giving orders to its cowed creator.